eCommerce Aggregator¶

Introduction¶

Users find it tedious to switch between tabs and manually visit different websites to compare products and prices. Our team helped one of our clients solve this aggregation problem in the eCommerce domain. The focus of the project was to:

Allow users to search using natural-language queries

Aggregate products from 100+ sites onto one platform

Provide analytics & monetization tools

Key challenges¶

Dealing with unstructured data and no single API as a source

Monitoring prices and out-of-stock status in near real time

Building a machine-learned automatic classification model

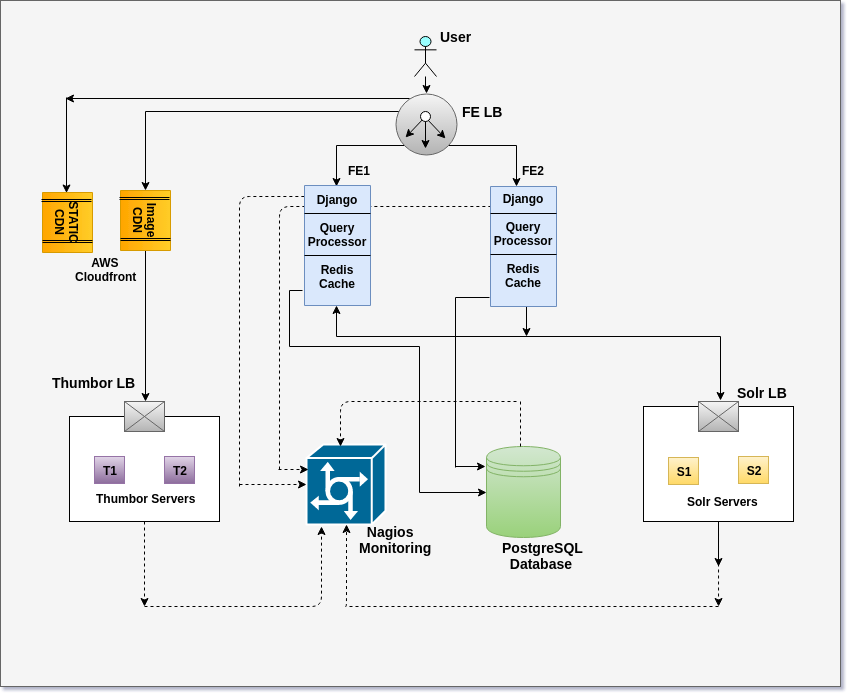

Architecture¶

Data Collection¶

A typical eCommerce Aggregator has to collect data relating to:

Products across diverse categories

Deals & Special promotions from various partner sites

Banners for AdServer

eCommerce sites are built using frameworks or SaaS services such as Magento, Shopify or WooCommerce. At times, syncing the product catalog with the aggregator becomes tricky because API access may not be readily available. The only option in that case is to crawl individual sites, and in doing so one faces the following challenges:

Variations in HTML of different websites

Difficulty in scraping pages rendered by client-side JavaScript frameworks {E.g. React.js}

Frequent layout changes by partner sites

Rate-limiting filters on partner sites

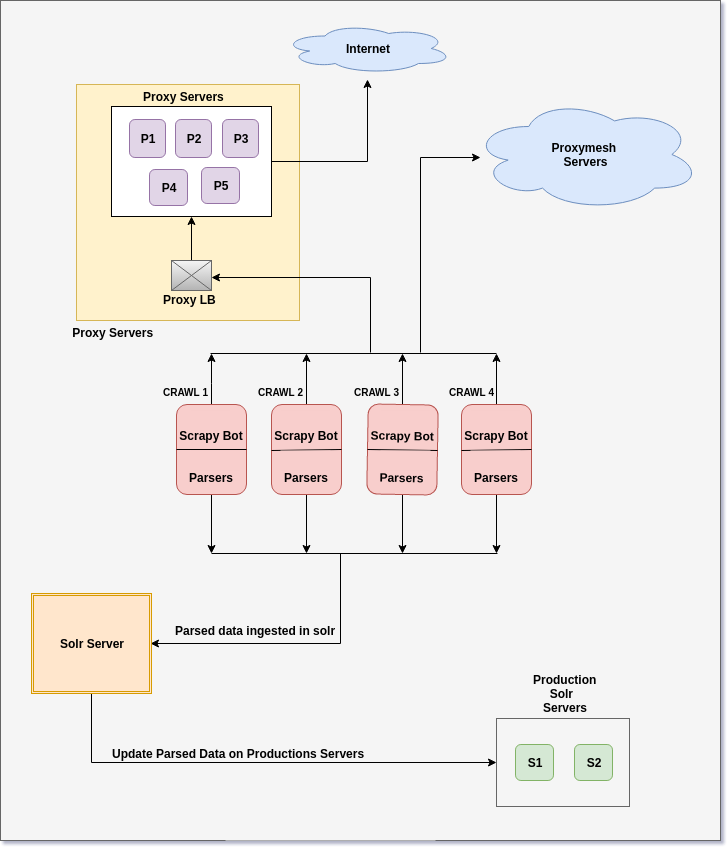

To handle this scenario, we developed a custom crawler with the following features:

Unified infrastructure to crawl both APIs and websites

Ability to crawl Deals and New Arrivals on a daily basis

Ability to scale crawling using a distributed setup

Custom proxy farm to work around rate limiting

Test suite to report parser breakage

Automatic categorization of products based on heuristics at crawl time
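
The last feature, heuristic categorization at crawl time, could be sketched roughly as follows. The actual heuristics used in the project are not described here, so the keyword table and the `categorize` function are hypothetical and only illustrate the idea of a cheap rule-based first pass.

```python
# Hypothetical keyword rules; the real heuristics are not listed in this document.
CATEGORY_KEYWORDS = {
    "Electronics": {"phone", "mobile", "laptop", "tv", "headphones"},
    "Fashion": {"shirt", "trousers", "dress", "blazer", "shoes"},
}


def categorize(title, breadcrumb=""):
    """Return the category whose keywords match the most tokens, or None."""
    tokens = set((title + " " + breadcrumb).lower().split())
    best, best_hits = None, 0
    for category, keywords in CATEGORY_KEYWORDS.items():
        hits = len(tokens & keywords)
        if hits > best_hits:
            best, best_hits = category, hits
    return best
```

Products that match no rule come back as `None` and could be left for a later classification step.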

We arrived at this custom crawler after evaluating existing open-source crawlers such as Nutch. With this setup we were able to complete a crawl cycle of about 3,000,000 pages in about 4 days on a cluster of 5 nodes.
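
As a rough sanity check on those figures, the implied throughput can be worked out directly. This assumes the crawl ran continuously for the full four days and that load was spread evenly across the nodes, neither of which is confirmed by the figures themselves.

```python
pages = 3_000_000
days = 4
nodes = 5

seconds = days * 24 * 60 * 60       # 345,600 seconds in the crawl cycle
cluster_rate = pages / seconds      # pages per second across the cluster
node_rate = cluster_rate / nodes    # pages per second on each node

print(f"{cluster_rate:.2f} pages/s for the cluster, {node_rate:.2f} pages/s per node")
```

Under those assumptions the cluster averages a little under 9 pages per second, or fewer than 2 pages per second per node.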

Price & Out of Stock Monitoring¶

Up-to-date prices are key when aggregating products from multiple sites, which is why we put special engineering effort into making sure prices were updated regularly. A related sub-problem was making sure that products ranked high in search results were actually in stock, which meant keeping track of product availability on the source site.

These issues were solved by:

Identifying categories with highly volatile prices. Our research found that the Electronics and Fashion categories {especially Women's Fashion} were volatile

Periodic price updates for both volatile and non-volatile categories

Real-time price checks: when a user clicked on product details, we performed a price check in real time

All these steps allowed us to give a better user experience and keep our catalog fresh.
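
One way to picture the periodic updates is a per-category refresh interval, with volatile categories checked more often. This is a minimal sketch: the interval values and the names `refresh_interval` and `is_due` are assumptions for illustration, not the project's actual settings.

```python
from datetime import datetime, timedelta

# Categories reported as volatile above; the intervals are hypothetical.
VOLATILE_CATEGORIES = {"Electronics", "Fashion"}
VOLATILE_INTERVAL = timedelta(hours=6)
DEFAULT_INTERVAL = timedelta(days=2)


def refresh_interval(category):
    """Volatile categories get a shorter interval between price checks."""
    if category in VOLATILE_CATEGORIES:
        return VOLATILE_INTERVAL
    return DEFAULT_INTERVAL


def is_due(category, last_checked, now):
    """True when the product's price has not been checked within its interval."""
    return now - last_checked >= refresh_interval(category)
```

A check like `is_due` could be evaluated from a periodic background task that re-queues stale products for crawling.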

Custom Search Engine Features¶

Search is an important differentiator for most online businesses, and it is an active area of research in computer science. Key focus areas for improving a search engine include:

Understanding the user's intent

Making the search engine resilient to typos

Creating a ranking model that aligns business and user preferences

Giving structured responses to ambiguous queries

Let's say a user types in:

blue checked shirt

blue checked shirts for men

blue checked shirts for women less than 2000

The intent in these three cases is different, but a traditional keyword-based search would treat all three queries almost the same. Our custom search engine implementation handled this by identifying the following attributes in a query:

Product Category

Color

Special Attributes {E.g. 4g compatible mobiles, 24gb storage phones}

Gender

Price Quantification {including ranges E.g. between 300 and 500}

Brand
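
To make the idea concrete, here is a minimal sketch of pulling a few of these attributes out of a query with simple rules. The vocabularies, regular expressions and the `parse_query` function are hypothetical; the project's actual extraction logic is not described in this document and was likely richer (categories, brands, special attributes).

```python
import re

# Hypothetical vocabularies, for illustration only.
COLORS = {"blue", "black", "red", "white", "green"}
GENDERS = {"men": "men", "mens": "men", "women": "women", "womens": "women"}
MAX_PRICE = re.compile(r"\b(?:less than|under|below)\s+(\d+)\b")
PRICE_RANGE = re.compile(r"\bbetween\s+(\d+)\s+and\s+(\d+)\b")


def parse_query(query):
    """Split a free-text query into structured attributes plus leftover keywords."""
    text = query.lower()
    attrs = {}
    match = PRICE_RANGE.search(text)
    if match:
        attrs["min_price"] = int(match.group(1))
        attrs["max_price"] = int(match.group(2))
        text = PRICE_RANGE.sub(" ", text)
    else:
        match = MAX_PRICE.search(text)
        if match:
            attrs["max_price"] = int(match.group(1))
            text = MAX_PRICE.sub(" ", text)
    remaining = []
    for token in text.split():
        if token in COLORS:
            attrs["color"] = token
        elif token in GENDERS:
            attrs["gender"] = GENDERS[token]
        elif token != "for":  # drop the filler word that precedes a gender
            remaining.append(token)
    attrs["keywords"] = " ".join(remaining)
    return attrs
```

With this sketch, `parse_query("blue checked shirts for women less than 2000")` yields a color, a gender, a maximum price and the keywords `checked shirts`, which can then be mapped onto separate filters rather than one keyword match.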

Users will not always type the right query or the correct spelling, so the onus of figuring out what the user meant falls on the search engine. Let's look at some of the cases that a typical search engine has to handle:

Typos {E.g. bleu shirt when they actually meant blue shirt}

Synonyms {E.g. black blazer and black suit}

Specific models {E.g. Moto X Play}

Query segmented in the wrong place {E.g. samsung suit case as opposed to samsung suitcase}

Abbreviated units {E.g. GB for Gigabytes}

These issues are generally handled with run-time string transformations that make a query more consistent with the indexing scheme.
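
A minimal sketch of such transformations is shown below, covering unit abbreviations, wrongly split words and typos. The vocabulary, the similarity cutoff and the `normalize` function are assumptions made for illustration; it uses Python's standard `difflib` for fuzzy matching, which is not necessarily what the project used.

```python
import difflib
import re

# Hypothetical vocabulary of indexed terms.
VOCABULARY = ["blue", "black", "shirt", "blazer", "suitcase", "samsung"]


def normalize(query):
    """Apply simple string transformations so the query matches indexed terms."""
    text = query.lower()
    # Unify unit spellings: "24 Gigabytes" and "24 GB" both become "24gb".
    text = re.sub(r"(\d+)\s*(gigabytes?|gb)\b", r"\1gb", text)
    tokens = text.split()

    # Join adjacent tokens when their concatenation is a known term.
    merged, i = [], 0
    while i < len(tokens):
        if i + 1 < len(tokens) and tokens[i] + tokens[i + 1] in VOCABULARY:
            merged.append(tokens[i] + tokens[i + 1])
            i += 2
        else:
            merged.append(tokens[i])
            i += 1

    # Replace unknown, purely alphabetic tokens with the closest known term, if any.
    fixed = []
    for token in merged:
        if token in VOCABULARY or not token.isalpha():
            fixed.append(token)
            continue
        close = difflib.get_close_matches(token, VOCABULARY, n=1, cutoff=0.7)
        fixed.append(close[0] if close else token)
    return " ".join(fixed)
```

Synonyms are left out of this sketch; they are often handled with a synonym list on the search engine side rather than by rewriting the query.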

Ranking is a core component that needs to be right: it has to balance business objectives with user-experience objectives. Let's say that, as part of a key partnership, the business wants to promote a brand even when the user is looking to purchase a competing brand. How do you rank products in that case?

Machine-learned models are a frequently used technique; however, they have a few limitations that we ran into while experimenting during the project:

Frequent re-training: when adding a new product category, we may need to update the model behind our ranking algorithm

Difficulty in bootstrapping: a labeled dataset is needed and has to be kept up to date, and when you're starting off this can be difficult to come by

High run-time computation requirement: this is especially true if you're using ensemble models

After experimenting with different methods, we came up with a simple linear score-based method that met our requirements. The factors in the score included:

Site specific boosting factor

Brand specific boosting factor

Freshness

Based on this scheme we achieved our objective of flexibility and control while implementing our custom ranking model.
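
A linear score of this kind can be sketched as a weighted sum of the factors. Everything concrete below is an assumption for illustration: the weights, the boost tables, the linear freshness decay, and the inclusion of a text-relevance term alongside the three factors listed above.

```python
from dataclasses import dataclass

# Hypothetical boost tables and weights; business users would tune these.
SITE_BOOST = {"partner-a.example": 1.5}
BRAND_BOOST = {"acme": 2.0}
WEIGHTS = {"relevance": 1.0, "site": 0.5, "brand": 0.5, "freshness": 0.25}


@dataclass
class Product:
    name: str
    site: str
    brand: str
    relevance: float  # text relevance reported by the search engine (assumed input)
    age_days: int     # days since the product was last crawled


def freshness(age_days, horizon_days=30):
    """1.0 for a product crawled today, falling linearly to 0.0 at the horizon."""
    return max(0.0, 1.0 - age_days / horizon_days)


def score(product):
    """Weighted sum of relevance, site boost, brand boost and freshness."""
    return (
        WEIGHTS["relevance"] * product.relevance
        + WEIGHTS["site"] * SITE_BOOST.get(product.site, 1.0)
        + WEIGHTS["brand"] * BRAND_BOOST.get(product.brand, 1.0)
        + WEIGHTS["freshness"] * freshness(product.age_days)
    )
```

Because each factor is an explicit term, promoting a partner brand is a matter of changing one boost value rather than re-training a model, which is the flexibility and control the linear approach was chosen for.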

Recommendation Engine¶

Related products and related queries are features that allow cross-selling of products and services, and effort is put into them to improve engagement.

During this project we implemented recommendations in the following zones:

Deals & Offers

Product Detail Page

Search Engine Results Page {related Categories}

Since eCommerce users often browse without logging in, we had to use content-based recommendation, which allowed us to find related products whenever a user looked at a product. We made a few customizations to the engine's behaviour: for Fashion we wanted to show more diverse results {E.g. when shopping for trousers we also wanted to show belts, shoes etc}, while for Electronics categories we avoided this diversification.
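
The sketch below illustrates content-based recommendation with an optional diversification step. It is only one possible reading: the tag-based product representation, the Jaccard similarity measure, the sample catalog and the `related` function are all assumptions, not the project's actual engine.

```python
def jaccard(a, b):
    """Overlap between two tag sets, from 0.0 (disjoint) to 1.0 (identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


# Hypothetical catalog entries described by content tags.
CATALOG = [
    {"id": 1, "category": "trousers", "tags": {"men", "formal", "black", "cotton"}},
    {"id": 2, "category": "trousers", "tags": {"men", "formal", "grey", "cotton"}},
    {"id": 3, "category": "belts", "tags": {"men", "formal", "black", "leather"}},
    {"id": 4, "category": "shoes", "tags": {"women", "casual", "white"}},
]


def related(product, catalog, diversify=False, limit=3):
    """Rank other products by tag similarity.

    diversify=False keeps results within the product's own category;
    diversify=True keeps the best match from each category instead.
    """
    candidates = sorted(
        (p for p in catalog if p["id"] != product["id"]),
        key=lambda p: jaccard(product["tags"], p["tags"]),
        reverse=True,
    )
    if not diversify:
        same = [p for p in candidates if p["category"] == product["category"]]
        return same[:limit]
    seen, picked = set(), []
    for p in candidates:
        if p["category"] not in seen:
            seen.add(p["category"])
            picked.append(p)
    return picked[:limit]
```

In this sketch a Fashion product page would call `related(..., diversify=True)`, while an Electronics page would use the default.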

Analytics & Monetization¶

Metrics for every product needed to be collected:

Basic Web Analytics {E.g. Visits, Pages/Session etc}

Engagement Metrics {E.g. Searches/Session, Clicks/Session}

A few business metrics were also needed for affiliate management. E.g. any time a user was referred to a partner site, we had to log the referral. This was done to comply with the Revenue Sharing Agreement and any other business contracts we might have with the partner site.

Operational metrics were also collected for server operations and crawling, along with the number of products going out of stock.

An ad server was also configured to run banner campaigns, earning revenue for the project.

Software Components Used¶

Django: Pythonic Web Framework for building Web Applications

Django REST Framework: Building APIs that other applications can use to talk to the aggregator

Postgres: Database for storing and retrieving information

Apache Solr: Search engine component that lets users search by different parameters

Bootstrap + jQuery: For implementing frontend validations

Celery: Background tasks and periodic tasks

scikit-learn: For building the classification and ranking models

Revive: Adserver that was used to run Banner campaigns on behalf of partner sites

Nagios: Server monitoring and alerting system

Project Management¶

The entire technology side was handled by our team

Our team followed an Agile approach to implementing the project

Our tech team participated in weekly standups

Daily summary reports were sent, along with pushes to staging instances

Our team also onboarded partners through technical consulting